PART 2 : INDEX

SOCIAL MEDIA WARS : THE GAME OF TROLL

MAPPING THE TWITTER BATTLEFIELD

CASE OF ARTIFICIAL POLITICAL HASH AGENTS ON TWITTER

POLITICAL BUBBLES WITHIN FACEBOOK AQUARIUM

ARRESTS AND DETENTIONS

CONSEQUENCES

This is the second part of our story about information warfare. For the first part, please read:

MAPPING AND QUANTIFYING POLITICAL INFORMATION WARFARE : PROPAGANDA, DOMINATION & ATTACKS ON ONLINE MEDIA

– VI –

Social media wars : The Game of Troll

The initial concept of the internet and its architecture promised us a decentralised and democratic possibility in which every person is a medium. But 50 years later, not much of this dream is left. In reality, infrastructure and services became highly centralised, controlled by internet service providers and gigantic internet companies such as Google or Facebook. Yes, we still have a chance to be the media, but in most cases only within bigger social and media structures, owned and controlled by someone else. Still, even in this case it is much harder for governments or political actors to control the media than it was 20 years ago, when the nodes they needed to control were concentrated in just a few national TV stations and newspapers. In that sense, a new form of battleground appeared with the birth of social media.

It is a battle for domination over the individual nodes (people) and their social graphs.

By instrumentalizing and conquering individual nodes, political actors are able to interfere with and influence those nodes’ social graphs (see: Human Data Banks and Algorithmic Labour, SHARE Labs 2016 [1]), which consist of their social circles: hundreds of friends, colleagues and relatives. This doctrine is about conquering the information streams of others through proxies. Social network ecosystems are fertile ground for different forms of disinformation or smear campaigns against opponents, or just for cheerleading activities, depending on the style of the political warfare. In such an environment, political propaganda (the spreading of ideas, information, or rumor for the purpose of helping or injuring an institution, a cause, or a person [2]) can be executed through individual nodes that are anonymous or have no visible, direct connection between their real-life identities and a political party.

Mapping the Twitter battlefield

When it comes to identifying the actors and measuring their influence in a given network, Social Network Analysis (SNA) is the horse to bet on. The method has been used in many forms and variations, and it is grounded in graph theory.

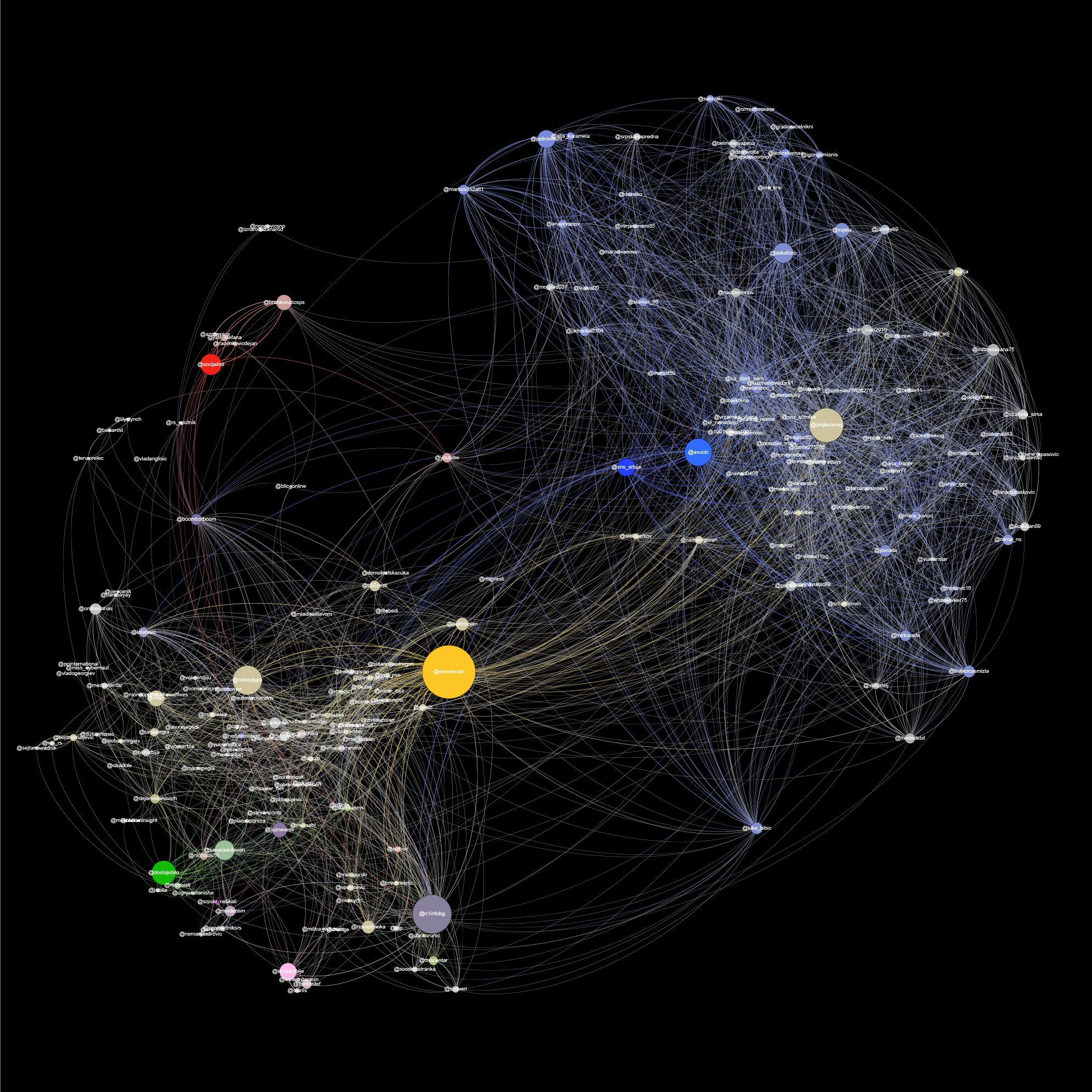

Social network analysis based on the corpus of all interactions containing the hashtag #izbori2016 (#elections2016) during the pre-election period in Serbia

How to read this graph?

When looking at the presented graph, there are a couple of things one should keep in mind. The nodes in the network are people or entities (organizations), and the edges are the interactions between them. (1) The size of a node reflects the number of interactions (tweets to, replies) that the node has been involved in; it is the sum of its incoming and outgoing interactions. (2) We colored the biggest nodes of the political organizations according to the political structure they represent, and colored their immediate neighbors in the network with a less intense variant of the same color.
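The node-size rule described above can be illustrated with a minimal sketch. It is not the pipeline used for the graph; the function name `node_sizes` and the sample accounts are hypothetical, and we assume each interaction is available as a simple (author, addressed account) pair.

```python
from collections import Counter


def node_sizes(interactions):
    """Return {account: incoming + outgoing interaction count}.

    `interactions` is an iterable of (source, target) pairs, where
    `source` wrote the tweet and `target` was replied to or mentioned.
    """
    sizes = Counter()
    for source, target in interactions:
        sizes[source] += 1  # one outgoing interaction for the author
        sizes[target] += 1  # one incoming interaction for the addressee
    return dict(sizes)


# Hypothetical sample data, for illustration only.
sample = [
    ("user_a", "party_x"),
    ("user_b", "party_x"),
    ("party_x", "user_a"),
    ("user_c", "party_y"),
]
sizes = node_sizes(sample)

# List the accounts from the biggest node to the smallest.
for account, size in sorted(sizes.items(), key=lambda kv: -kv[1]):
    print(account, size)
```

In graph-theory terms this is the total degree of a node in a directed multigraph, which is why accounts that are addressed often grow large even if they rarely tweet themselves.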

What this graph shows is in many ways a reflection of Serbian society, or at least of the Twitter community in Serbia. It is strongly polarised, with the ruling party on one side (the blue node) and the rest on the other. A few nodes gravitate to the sides, which does not mean they are neutral, but rather that they operate in a microcosm of their own. In Serbian political rhetoric, the tone of “us” vs. “them” has been very much present for a while now. With self-victimization and populism among the strongest traits of modern Serbian politicians, the general tone of the 2016 election campaign was much more negative (towards opponents) than affirmative (towards the parties’ own programs and promises).

We can easily spot two different types of troll-lord activities on this graph.

Case of artificial political hash agents on Twitter

Apart from the real human troll lords, social network analysis also lets us spot traces of primitive artificial actors trying to participate in the information warfare.

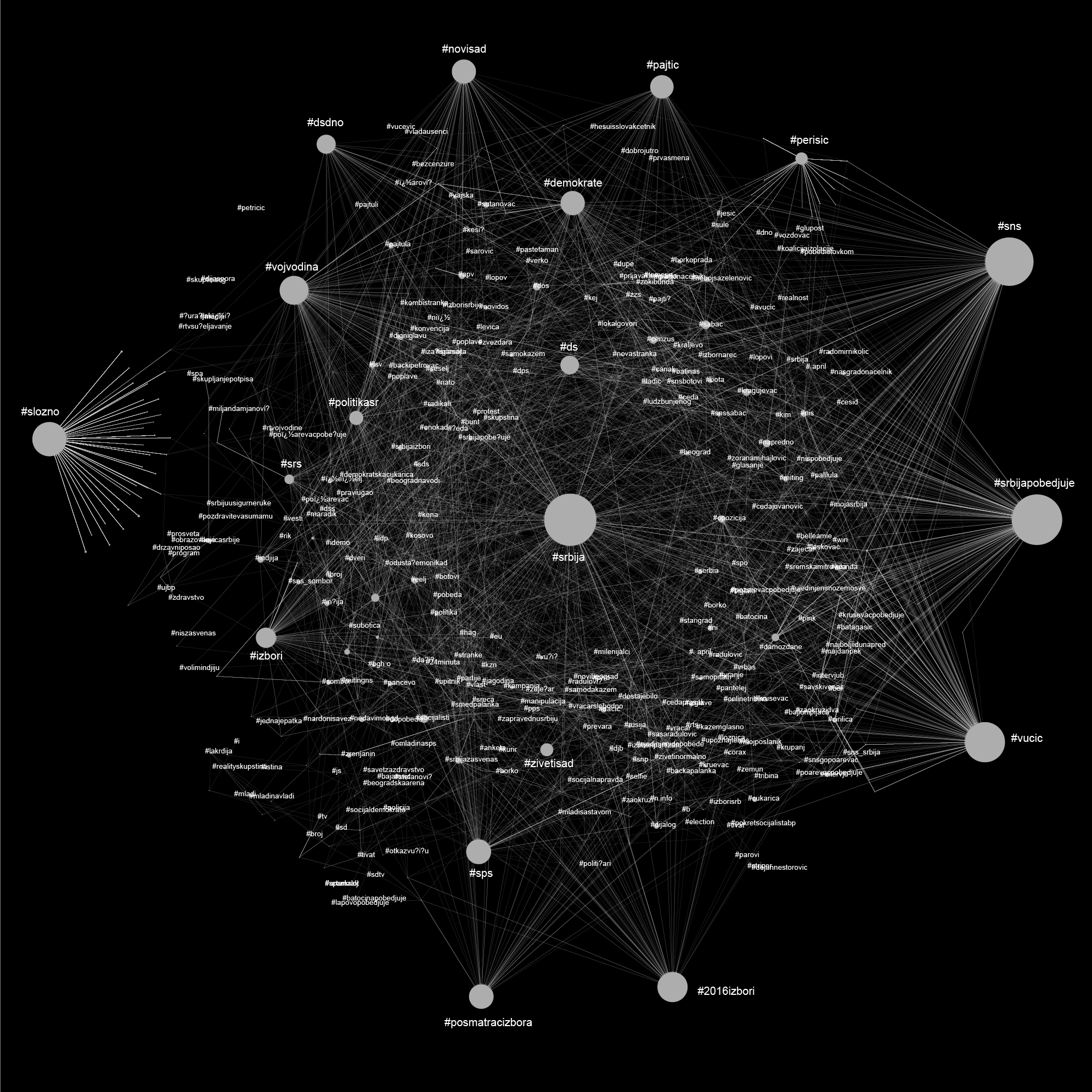

This phenomenon can be clearly seen on the left side of the graph.

Social network analysis based on the corpus of all hashtags that appear together with the hashtag #izbori2016 (#elections2016) during the pre-election period in Serbia.
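A hashtag co-occurrence network of this kind could be assembled roughly as follows. This is a sketch under our own assumptions, not the original tooling: the function `cooccurrence_edges`, the regular expression and the sample tweets are hypothetical. Every pair of hashtags that appears in the same tweet alongside the anchor hashtag becomes a weighted edge.

```python
import re
from collections import Counter
from itertools import combinations

# Simple hashtag pattern; \w is Unicode-aware in Python 3.
HASHTAG = re.compile(r"#(\w+)")


def cooccurrence_edges(tweets, anchor="izbori2016"):
    """Count how often two hashtags appear in the same tweet.

    Only tweets that contain the anchor hashtag are considered.
    Keys are alphabetically ordered (tag_a, tag_b) tuples.
    """
    edges = Counter()
    for text in tweets:
        tags = sorted({tag.lower() for tag in HASHTAG.findall(text)})
        if anchor not in tags:
            continue  # tweet is outside the corpus
        for tag_a, tag_b in combinations(tags, 2):
            edges[(tag_a, tag_b)] += 1
    return edges


# Hypothetical sample tweets, for illustration only.
tweets = [
    "Glasajte! #izbori2016 #Srbija",
    "#izbori2016 #srbija #debata",
    "#fudbal #srbija",
]
edges = cooccurrence_edges(tweets)
```

Accounts that post the same unusual combination of hashtags over and over would show up in such a graph as a tight, heavily weighted cluster, which is one plausible way automated activity becomes visible.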

Political bubbles within Facebook aquarium [4]

The world’s largest social network, deeply dissected in our previous research and used by more than half of Serbian citizens [5], is probably one of the biggest ecosystems of information warfare. Without going too deeply into the question of Facebook itself being an active agent, a non-neutral player with the ability to shape, alter or censor political discussions, we can simply state that the interface, structure and algorithms behind Facebook dictate the rules of this warfare.

Eli Pariser, in his book The Filter Bubble, claims that users get less exposure to conflicting viewpoints and are intellectually isolated in their own informational bubble, created by Facebook algorithms that selectively guess what information a user would like to see based on information about that user. This phenomenon is also known as an “echo chamber”: a metaphorical description of a situation in which information, ideas, or beliefs are amplified or reinforced by transmission and repetition inside an “enclosed” system, where different or competing views are censored, disallowed, or otherwise underrepresented.

In other words, this creates a highly politicised space, but at the same time these partly user-governed, partly algorithm-governed bubbles can easily become chambers where people with the same political affiliations just talk among themselves, without exposure to other political points of view.

We collected data from the official Facebook pages of 20 political parties in Serbia and conducted several data analyses (see: link). For this analysis we present the one quantifying the number of users and the number of interactions (like, share, comment) they had with the official pages of political parties.

On these charts, each circle represents a single user who interacted with the official pages of political parties. The size and color of each circle are proportional to the number of interactions. This approach allows us to observe the relationship between users with a large number of interactions (users with more than 100 interactions with a party are marked in black) and users with fewer (gray).

These graphs can help us get a feel for the size of each party’s online political propaganda machine. From our point of view, it is reasonable to claim that users who have more than 100 interactions (black bubbles) with one party during a 5-week period can be considered active participants: agents of political propaganda spreading a political agenda within their networks of friends.
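The split between heavy and occasional users can be expressed in a few lines. The sketch below is illustrative only: the event format (user, page, interaction type), the function names and the sample data are our assumptions, and only the threshold of more than 100 interactions comes from the analysis itself.

```python
from collections import Counter

# From the text: more than 100 interactions with one party in 5 weeks.
ACTIVE_THRESHOLD = 100


def interactions_per_user(events, party):
    """Count likes, shares and comments each user made on one party page."""
    counts = Counter()
    for user, page, _kind in events:
        if page == party:
            counts[user] += 1
    return counts


def split_users(counts, threshold=ACTIVE_THRESHOLD):
    """Return (active, casual) user sets; active means count > threshold."""
    active = {user for user, n in counts.items() if n > threshold}
    casual = set(counts) - active
    return active, casual


# Hypothetical sample events, for illustration only.
events = (
    [("u1", "party_a", "like")] * 101
    + [("u2", "party_a", "comment")] * 100
    + [("u3", "party_b", "share")] * 150
)
counts = interactions_per_user(events, "party_a")
active, casual = split_users(counts)
print(sorted(active), sorted(casual))
```

Note that the comparison is strict, so a user with exactly 100 interactions stays in the gray group, matching the “more than 100” wording.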

As we saw in the previous chapters on mainstream online media, that space is already conquered and dominated by the ruling party and government propaganda, and the field of public discussion in the comments is contaminated by orchestrated trolling armies. In this light, Facebook is the obvious choice for newly formed opposition parties to promote their agenda and communicate with their base. This can be clearly seen on the presented chart, where the two biggest groups are young opposition parties (Dveri, DJB). Even though they dominated the Facebook sphere, taking almost 50% of the interactions, in the election they only narrowly passed the electoral threshold (census) of 5%.

– VII –

Arrests and detentions

“They had come to a time when no one dared speak his mind, when fierce, growling dogs roamed everywhere, and when you had to watch your comrades torn to pieces after confessing to shocking crimes.”

Animal Farm, George Orwell

In the wake of the 2014 floods, when it became clear that pro-government tabloids would face no public scrutiny or official distancing for their scaremongering and false reports, a number of social media users were called in by the police for questioning. They were threatened with charges for “spreading panic” by posting rumors about the scope of the disaster and the consequences of public officials’ incompetence.

Whether or not the confusion over the legal provision, which deals with “inducing” rather than “spreading” panic, was deliberate, it proved to be a handy tool for the actual spreading of an online chill. Although their statuses were posted on private social media accounts and no actual panic resulted from them, the detainees included an 18-year-old, a father of two and a fashion makeup artist. All in all, the first wave counted at least fifteen cases across the greater Belgrade area. [6] In the following months, reports emerged of threats of charges against several local online and radio journalists from towns and smaller communities around the country, all connected to the floods.

By the fall of the same year, the Center for Investigative Reporting published testimonies of people who were held in custody and later charged with “attempted” inducing of panic. [7]

In the years that followed, this kind of ‘regulating’, not only of comment sections and social media posts but of the entire public sphere, was used on various occasions, though not all cases got news coverage. The latest happened in October 2016, when a mock politician who surprisingly made it onto a town council was called in for questioning on account of ‘panic’ for protesting an unresolved local drinking water issue. [8]

Another convenient legal ground for shaping public discourse is to read threats into online comments, supposedly aimed at the prime minister or some other prominent figure. [9] Again, there is no definite number of such cases, but the trend can be detected through splashy headlines that occasionally promote the skills of the ‘cyber police’ in catching ‘hackers’.

Both methods yield little in formal judicial proceedings: many of the cases will probably drag on until they are finally dropped or their statute of limitations expires. Much more interesting is their effect in adjusting public notions of what can be said out loud, and of where the boundaries lie of the private space in which one can freely express anger, frustration and the like.

Consequences

What are the real consequences of cyber attacks on online media and journalists in Serbia? Most cases of content disappearance and DDoS attacks do not have long-term consequences for the content itself. As John Gilmore, one of the founders of the EFF, famously stated: “The Net interprets censorship as damage and routes around it.” Content that has been taken off the network is often multiplied in different places, republished by other blogs or online media websites, and attracts even more readers.

Insecurity and Fear

We can claim that the main consequence of these attacks is a rise in insecurity and fear, resulting in a chilling effect on freedom of expression online. The fact is that publishing content that criticizes the structures of power (government, criminal groups or any other power) can result in the destruction, blocking or temporary disappearance of a website, followed by a great deal of stress and expensive working hours to restore the system, all of which can affect people’s willingness to express themselves freely. In cyberspace, defense is usually more expensive than attack. This can be highly discouraging for small and independent online and citizen media that cannot afford costly cyber security experts or technical solutions to protect themselves. According to Morozov [10], DDoS assaults put heavy psychological pressure on content producers, suddenly forcing them to worry about all sorts of institutional issues such as the future of their relationship with their Internet-hosting company, the debilitating effect that the unavailability of the site may have on its online community, and the like.

A somewhat positive outcome of this trend is that some of the more professional media organizations implemented DDoS mitigation systems, such as CloudFlare. In recent months the DDoS attacks have continued but, unlike those in 2014, they are much more sophisticated, using larger and more persistent botnets that are able to get around the free versions of CloudFlare and shut the servers down.

Chilling effect on the general public

Arresting individuals because of their blogs, comments, or other forms of writing online has a chilling effect not just on journalists and online media organizations, but on the general population of online users in Serbia, who make up 60% of Serbian citizens. As a result, it seems that citizens do not feel empowered and protected in the digital environment, which reduces the potential use of new technologies. The numerous legal proceedings commenced by the state in the past year can be expected to further strengthen the chilling effect on online speech.

Privacy violation and surveillance

Targeted attacks on personal and professional communication and working tools, such as emails, online documents and databases, can endanger the anonymity of sources and reveal investigation plans. They can also be used to discredit the victim by publishing private information, or for identity theft. Reaching the necessary level of digital security often implies complex procedures and changes to usual habits in the use of technology, which can reduce the efficiency of journalists and of the organization in general.

Discouraging public dialog

The scale of the manipulation of public opinion, carried out with technical tools and orchestrated by political party members who flood the main news portals and social networks with comments and statements, is transforming open spaces for dialog and expression of opinion online into fields where only one opinion can be heard, thus creating a false image of public opinion. This artificial noise makes the true voice of the individual almost impossible to hear, which discourages dialog about topics important to society.

In the Declaration on respecting Internet freedoms in political communication, Share Foundation, together with 200 respected national organizations and experts, pointed out that cases of internet censorship and attacks on websites and private accounts represent violations of human rights, and that they are contrary to the Constitution of Serbia and the law.

Failed to protect

We can claim that the Government has failed to protect the online media and citizen journalists in Serbia. We are aware that the relevant state bodies have limited technical and organizational capacities for a more efficient reaction in certain situations. However, what is really dangerous is that the reactions of the relevant public bodies (prosecution, police and judiciary) vary from case to case: sometimes they are very efficient, and sometimes very slow and without a proper response.

An extremely slow reaction, or a complete absence of reaction, from the state authorities is in most cases related to cyber attacks on online media, investigative journalists and citizens’ media critical of the government. In the past year, Share Foundation took an active role in monitoring and conducting cyber forensic analysis of the attacks on online media, and provided the authorities with numerous documents, but none of the major cases of attacks on those media has resulted in an arrest or even a clear statement from the authorities. This practice discourages citizens and online media organizations from believing that they will be protected by the state. The absence of a proper reaction opens space for theories that different power structures within the state have no interest in those cases or cyber attacks ever being solved.

On the other hand, the relevant public bodies proved to be very efficient in the arrests of and judicial proceedings against social media users and bloggers (the Malagurski case and the cases of inducing panic during the floods). All of those aspects together produce a lack of legal certainty in this area and an unsatisfactory level of rule of law.

< PART 1 : Mapping and Quantifying political information warfare : PROPAGANDA, DOMINATION & ATTACKS ON ONLINE MEDIA

DETAILED MONITORING DATA SETS, RESULTS AND ANALYSIS CAN BE FOUND HERE:

MONITORING OF ATTACKS

MONITORING OF ONLINE AND SOCIAL MEDIA DURING ELECTIONS ( IN SERBIAN )

MONITORING REPORTS

CREDITS

VLADAN JOLER – TEXT, DATA VISUALIZATION AND ANALYSIS

MILICA JOVANOVIĆ – TEXT

ANDREJ PETROVSKI – TECH ANALYSIS AND TEXT

THIS ANALYSIS IS BASED ON AND WOULD NOT BE POSSIBLE WITHOUT TWO PREVIOUS RESEARCH PROJECTS CONDUCTED BY SHARE FOUNDATION:

MONITORING OF INTERNET FREEDOM IN SERBIA (ONGOING FROM 2014) BY SHARE DEFENSE – PROJECT LED BY ĐORĐE KRIVOKAPIĆ, MAIN INVESTIGATORS BOJAN PERKOV AND MILICA JOVANOVIĆ.

ANALYSIS OF ONLINE MEDIA AND SOCIAL NETWORKS DURING ELECTIONS IN SERBIA (2016) BY SHARE LAB – DATA COLLECTION AND ANALYSIS DONE BY VLADAN JOLER, JAN KRASNI, ANDREJ PETROVSKI, MILOŠ STANOJEVIĆ, EMILIJA GAGRCIN AND PETAR KALEZIĆ.

1. Human Data Banks and Algorithmic Labour, SHARE Labs, 2016

2. Media’s Use of Propaganda to Persuade People’s Attitude, Beliefs and Behaviors

3. Gephi plugin that piped all tweets containing #izbori2016 to Gephi and our database.

4. In the Facebook Aquarium: The Resistible Rise of Anarcho-Capitalism, Ippolita

5. Half of Serbia Now on Facebook, September 28, 2012

6. Na saslušanje zbog Fejsbuka, May 23, 2014

7. Ispovesti uhapšenih zbog komentara na Fejsbuku, October 15, 2014

8. USIJAN FEJSBUK Preletačević pozvan na informativni razgovor i ostao bez Fejsbuk profila, October 17, 2014

9. Priveden zbog pretnji Vučiću, August 17, 2013

10. “Whither Internet Control?” Evgeny Morozov